This month has thrown off quite a bit of new information on Neanderthals and on the possibility that they interbred with some of the humans that came out of Africa. It's bound to stir up controversy.

The first interesting recent study extracted DNA from the 38,000-year-old remains of a probable Neanderthal, copied it into bacteria, and then sequenced it at leisure. (Query: do fundamentalists oppose this procedure as a forbidden "cloning of a human"?) This bacterial-Neanderthal chimera (actually, the Neanderthal genes are really just junk DNA from the point of view of the normally functioning bacteria) is an improvement over the traditional technique, which apparently destroys the original DNA in the process of sequencing it. Preserving the DNA is vital for this application: Neanderthal DNA has been severely damaged by 38,000 years of chemistry and can be obtained only with great difficulty, and only in small fragments.

Large-scale statistical analyses of these probable Neanderthal sequences suggest that few, if any, of our genes derive from Neanderthals. In other words, all of us trace nearly all, or all, of our ancestry to Homo sapiens originating in Africa c. 200,000 years ago rather than to Neanderthals, who originated some 700,000 years ago. Pretty much what most scientists have thought for the last few decades.

However, that does not mean humans and Neanderthals didn't interbreed, and it doesn't mean that none of us got any important genes from them. There is some fossil evidence from Portugal and Romania suggesting human-Neanderthal hybrids. Even more startling is a study published this month showing that about 70% of modern humans possess a gene for brain development (this paper goes straight for the controversy jugular!) derived from an "archaic species of Homo" rather than from our African Homo sapiens ancestors. The time of "introgression" (cross-breeding between the archaic species and the African-derived modern species, Homo sapiens) was about 37,000 years ago, and the gene is overrepresented in Europe and Asia but uncommon in sub-Saharan Africa (I warned you about controversy!). So it's a good guess that this gene was a "gift" from a sneaky Neanderthal to the second wave of modern humans to leave Africa, the ancestors of most modern Europeans and Asians. It was a gift that was positively selected (it replaced its African-derived alternative almost everywhere the two were in competition), and with which most of us are now blessed. The Neanderthal DNA sequencing study shows that the African genes won the vast majority of the other competitions.

Monday, November 27, 2006

Sunday, November 26, 2006

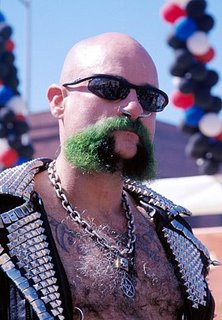

Are green beards ubiquitous?

This post is for those of you who are fellow fans of evolutionary theories of behavior.

A genetic strategy has been postulated by evolutionary theorists such as William Hamilton and dubbed by Richard Dawkins the "green beard effect." This effect has been observed in nature, though only rarely has it been clearly distinguished from other recognize-and-discriminate systems.

Green beard genes are genes (or more likely small sets of linked genes) that code for the recognition of their presence in other individuals along with discriminatory favoritism toward those individuals. Kin altruism, although quite common and treated in this article as a distinct effect, can be thought of as a special case of the green beard effect. In kin altruism genes "recognize themselves" only in probabilistic assemblages, i.e. by degree of kinship (your children and siblings each carry, on average, 1/2 of your uncommon mutations; your nieces, nephews, and grandchildren carry 1/4; etc. Note that we are counting new and thus still uncommon mutations, not stable genes: the important question is what will favor or disfavor new mutations. Evolutionary pressure takes ubiquitous genes for granted).
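The kinship fractions above follow from counting coin-flip transmissions. A minimal Python sketch, assuming a rare allele that you carry in a single copy and inherited from one parent (not one that arose new in you), estimates them by simulation and checks them against the closed form (1/2)^k for k transmissions. The relation names are illustrative labels only.

```python
import random

# Number of meioses (coin-flip transmissions) on the path that carries your
# rare allele to each relative. Assumes the allele is rare (one copy) and was
# inherited from one parent, not newly arisen in you.
MEIOSES = {"child": 1, "sibling": 1, "grandchild": 2, "niece_or_nephew": 2}


def simulated_share(relation: str, trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(relative carries your rare allele)."""
    rng = random.Random(seed)
    steps = MEIOSES[relation]
    hits = sum(
        # every transmission along the path must pass the single copy
        all(rng.random() < 0.5 for _ in range(steps))
        for _ in range(trials)
    )
    return hits / trials


def expected_share(relation: str) -> float:
    """Closed form: each meiosis passes a single copy with probability 1/2."""
    return 0.5 ** MEIOSES[relation]


for rel in MEIOSES:
    print(f"{rel:16s} simulated={simulated_share(rel):.3f} expected={expected_share(rel):.3f}")
```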

"Green beard" is a misleading name as it has led scientists to look for blatant signals, but as I argue below the effects are much more likely to be subtle and parasitical on other kinds of recognition systems. (I don't know to what extent, if any, the following "green beard"/"blue beard" theory of mine is original, as I haven't thoroughly researched this literature and the theory is at least partially suggested by the papers I have read and cite here). Most evolutionary scientists have thought green beard effects would be rare because they have generally not found such blatant signals associated with clear green beard effects and because green beard effects require the simultaneous appearance of three distinct yet genetically linked traits:

(1) a detectable feature of the phenotype,

(2) the ability to recognize this feature (or the lack of it) in others, AND

(3) different behavior toward individuals possessing the feature versus those lacking it.
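To see what happens when all three traits do travel together, here is a toy replicator model in Python. It assumes a single allele carrying all three traits with no recombination, a haploid population, random pairwise encounters, and hypothetical parameters `b` (benefit received) and `c` (cost paid); none of these values come from the literature cited here. With perfect linkage every bearer of the feature is a carrier, so a carrier meets and helps another carrier with probability p and nets p(b - c).

```python
def green_beard_step(p: float, b: float, c: float) -> float:
    """One generation of selection on a perfectly linked green beard allele.

    p: frequency of carriers (haploid toy model).
    A carrier meets a random partner; with probability p the partner is also a
    carrier, and then each pays c to help the other, which receives b.
    Non-carriers are never recognized, never help, and are never helped.
    """
    w_green = 1.0 + p * (b - c)
    w_plain = 1.0
    mean_w = p * w_green + (1.0 - p) * w_plain
    return p * w_green / mean_w


def simulate(p0: float, b: float, c: float, generations: int) -> float:
    """Iterate the selection step and return the final carrier frequency."""
    p = p0
    for _ in range(generations):
        p = green_beard_step(p, b, c)
    return p


# Hypothetical parameters: benefit exceeds cost, then cost exceeds benefit.
print(simulate(0.1, b=0.5, c=0.1, generations=200))
print(simulate(0.1, b=0.1, c=0.5, generations=200))
```

In this toy the allele spreads whenever b > c, but only slowly while rare, since a rare carrier seldom meets another. If recombination split the feature from the helping behavior, bearers of the feature alone would collect b without paying c, which is why tight linkage matters.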

This argument for green beard rarity is flawed, because a wide variety of recognition systems already exist that can readily give rise to green beard genes, some of the main ones being:

+ gender recognition and sexual selection (the tendency to prefer some characteristics in mates and avoid others)

+ conspecific recognition (distinguishing own species from others)

+ kin recognition for kin altruism

+ maternal recognition of self vs. fetus

Let's call these "normal" recognition systems "blue beards." As signals they are just as blatant, or just as subtle, as "green beards." Any recognition system is imperfect: it is coded for by only some of the genes it benefits, and some of these genes will be more strongly linked than others. The more strongly linked genes automatically favor each other: they are green beard genes. Thus almost any recognize-and-discriminate system can be expected to give rise to the following green beard cycle:

(1) green beard genes arise within an existing recognize-and-discriminate system (the blue beard genes) and spread in the population,

(2) as the green beard genes spread, the recognition system becomes noisier for unlinked (blue beard) genes,

(3) the noise has enough negative impact on unlinked blue beard genes (beneficiaries of the original recognition system) that the blue beard genes develop modifiers to suppress the green beards.

Alternatively, the green beard genes might have only a slightly deleterious effect on unlinked genes and thus might succeed. Consider a hypothetical cuckoo, a brood parasite, whose recognition system is under genetic pressure to favor blue or green tufts, because these are the best ways (and blue is slightly better than green) for adults to recognize each other as distinct from the adults of their host species, which are red. The gene coding for the green tuft, however, is linked to the recognize-and-discriminate genes, while a different gene coding for the blue tuft is unlinked. The green beard effect favors the green tuft even though it is slightly worse than the blue tuft as a recognition signal.

A similar effect might help explain runaway sexual selection for expensive features such as peacock tails: in any sexual selection process, signal genes linked to signal recognition genes will favor each other until they become too expensive for unlinked genes. It's an arms race between the linked green beard genes and the unlinked green beard suppression genes, and it may be that full elimination of the green beards is often not the equilibrium. Indeed complete elimination can't be the equilibrium, since recognition systems are far from perfect, but almost complete elimination is probably the normal equilibrium. Some examples of exorbitant display may be green beard gene effects that persist because the unlinked genes lack the mutations needed to modify, and thus suppress, the green beard genes.

We can thus expect a Red Queen-type arms race between the green beard genes and the blue beard genes. That is just what has recently been found in the mother-fetus discrimination system (wherein the mother's cells distinguish her own cells from fetal cells and treat them differently). I expect far more effects like these to be found.

Sometimes green beard genes exist in equilibrium with a counter-effect such as lethal recessives. This has actually been observed in the red fire ant. In this ant, the kin recognition system, normally used by worker ants to recognize and kill unrelated queens, has given rise to green beard gene(s). One of these genes, which codes for a pheromone-binding protein, is always part of the green beard: at this locus "bb" is a lethal recessive, but "BB" also turns out to be lethal, because "Bb" workers reliably recognize and kill "BB" queens just before they lay their first eggs. Thus "B" and "b" exist in equilibrium.
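The fire ant case is a balanced polymorphism: selection removes both homozygotes. A minimal Python sketch using the textbook single-locus model of heterozygote advantage shows why both alleles persist. It treats the ants as ordinary diploids with random mating and viability selection (BB fitness 1 - s, Bb fitness 1, bb fitness 1 - t), which ignores haploid males and colony structure, so it illustrates the logic rather than modelling the real fire ant system. The function names are hypothetical.

```python
def next_freq_B(p: float, s: float, t: float) -> float:
    """One generation of viability selection at a single diploid locus.

    Toy genotype fitnesses: BB = 1 - s, Bb = 1, bb = 1 - t.
    Random mating gives Hardy-Weinberg proportions p^2, 2pq, q^2 before
    selection. Requires 0 < p < 1 when s = t = 1 (else no survivors).
    """
    q = 1.0 - p
    w_BB, w_Bb, w_bb = 1.0 - s, 1.0, 1.0 - t
    mean_w = p * p * w_BB + 2 * p * q * w_Bb + q * q * w_bb
    # Surviving B alleles: all from BB survivors plus half from Bb survivors.
    return (p * p * w_BB + p * q * w_Bb) / mean_w


def equilibrium(s: float, t: float) -> float:
    """Standard heterozygote-advantage result: p* = t / (s + t)."""
    return t / (s + t)


def iterate(p0: float, s: float, t: float, generations: int) -> float:
    p = p0
    for _ in range(generations):
        p = next_freq_B(p, s, t)
    return p


# Both homozygotes lethal, as described for the fire ant: p jumps to 1/2.
print(next_freq_B(0.1, s=1.0, t=1.0), equilibrium(1.0, 1.0))
# Milder, hypothetical selection still converges to an interior equilibrium.
print(iterate(0.1, s=0.3, t=0.6, generations=200), equilibrium(0.3, 0.6))
```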

Only a few green beard genes have been observed in nature so far because (1) they are almost always associated with, and parasitical on, existing recognize-and-discriminate systems (blue beards), and it is hard for observers to distinguish green beards from blue beards, and (2) they are mostly transient: they are either soon (in evolutionary time) suppressed by blue beard genes or soon come to dominate the population, destroy the original recognition system, and become evolutionarily functionless (i.e. they don't need to code for anything any more once competition is eliminated, so they become just more junk DNA). An equilibrium like that reached in the fire ant will be a rarity.

Tuesday, November 21, 2006

Underappreciated (ii)

The potential for the application of discrete mathematics beyond computer science: in biology, economics, sociology, etc.

Hal Finney's Reusable Proofs of Work is important for at least two reasons: as a good stab at implementing bit gold, and for its use of the important idea of transparent servers, which use remote attestation to verify the code running on them.
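RPOW built on hashcash-style proof of work. As a minimal Python sketch of just that building block (not RPOW's reuse mechanism or its attested server), the code below mints and checks a token whose SHA-256 hash has a chosen number of leading zero bits. The `resource:nonce` string format and the function names are hypothetical; real hashcash stamps use their own header format.

```python
import hashlib
from itertools import count


def leading_zero_bits(digest: bytes) -> int:
    """Count the leading zero bits of a hash digest."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()
        break
    return bits


def mint(resource: str, difficulty: int) -> int:
    """Search for a nonce so SHA-256("resource:nonce") has `difficulty` leading zero bits.

    Costs about 2**difficulty hash evaluations on average.
    """
    for nonce in count():
        digest = hashlib.sha256(f"{resource}:{nonce}".encode()).digest()
        if leading_zero_bits(digest) >= difficulty:
            return nonce


def verify(resource: str, nonce: int, difficulty: int) -> bool:
    """Checking a token costs a single hash evaluation."""
    digest = hashlib.sha256(f"{resource}:{nonce}".encode()).digest()
    return leading_zero_bits(digest) >= difficulty


nonce = mint("alice@example.com", 16)
print(nonce, verify("alice@example.com", nonce, 16))
```

The asymmetry (expensive to mint, one hash to check) is what makes such tokens usable as a scarce, verifiable resource.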

The role of Stockholm syndrome in politics. Left as an exercise for the student. :-)

Three "silent" movies which I've had the good fortune to see on the big screen with live music: The Merry Widow, The Phantom of the Opera, and Safety Last.

Sunday, November 19, 2006

Underappreciated

Given that I subscribe to the law of the dominant paradigm, I have a soft spot in my heart for ideas that haven't gotten the attention they deserve. I try to choose such ideas to blog on. But I can't give all such ideas that I know of the necessary attention. Here are some:

Richard Dawkins himself is by now overexposed, but in his quest to convert the world to the atheist meme he, along with most of the rest of the world, has neglected his own theory of the extended phenotype. Like the metaphor of the selfish gene, this is an insight with many very important consequences. So far we've seen only the tip of this iceberg.

Yoram Barzel is one of the most important economists of the last half century, but few people know this. Along with the much more famous Friedrich Hayek, Barzel is probably the economist who has influenced me the most. He has had a number of important insights related to transaction costs which I've confirmed repeatedly in my own observations and readings in economic history. Probably his most important insights relate to the importance and nature of value measurement. (“Measurement Cost and the Organization of Markets,” Journal of Law and Economics, April 1982; reprinted in The Economic Foundations of Property Rights: Selected Readings, S. Pejovich, ed., Edward Elgar Publishing Company, 1997, and in Transaction Costs and Property Rights, Claude Menard, ed., Edward Elgar Publishing Company, 2004.) Another is his analysis of waiting in line (queuing) versus market prices as two methods of rationing. Waiting in line is the main means of rationing in a socialist economy but is surprisingly widespread even in our own. (“A Theory of Rationing by Waiting,” Journal of Law and Economics, April 1974; reprinted in Readings in Microeconomics, Breit, Hochman, and Sauracker, eds., 3rd ed., 1986.)
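The contrast between rationing by price and rationing by waiting can be illustrated with a toy Python model. It assumes a fixed number of units, buyers who each want one unit, hypothetical valuations and hourly time costs, and a queue that lengthens until only as many buyers remain willing to wait as there are units. It is a sketch of the general idea, not Barzel's own model.

```python
from dataclasses import dataclass


@dataclass
class Buyer:
    name: str
    value: float      # hypothetical: the most this buyer would pay for one unit
    time_cost: float  # hypothetical: value of one hour of this buyer's time


def ration_by_price(buyers: list[Buyer], units: int) -> list[str]:
    """Market-clearing price: units go to the highest valuations."""
    ranked = sorted(buyers, key=lambda b: b.value, reverse=True)
    return [b.name for b in ranked[:units]]


def ration_by_waiting(buyers: list[Buyer], units: int, ceiling_price: float):
    """Price held at a ceiling, so buyers queue instead.

    Each buyer will wait at most (value - ceiling_price) / time_cost hours.
    The queue settles at the longest tolerable wait among those shut out,
    so the units go to the buyers willing to wait longest.
    Returns (winners, equilibrium wait in hours, value of time spent by winners).
    """
    def max_wait(b: Buyer) -> float:
        return max(0.0, (b.value - ceiling_price) / b.time_cost)

    ranked = sorted(buyers, key=max_wait, reverse=True)
    winners = ranked[:units]
    wait = max_wait(ranked[units]) if len(ranked) > units else 0.0
    time_burned = sum(b.time_cost * wait for b in winners)
    return [b.name for b in winners], wait, time_burned


buyers = [
    Buyer("A", value=100.0, time_cost=50.0),
    Buyer("B", value=80.0, time_cost=10.0),
    Buyer("C", value=60.0, time_cost=5.0),
    Buyer("D", value=40.0, time_cost=5.0),
]
print(ration_by_price(buyers, units=2))
print(ration_by_waiting(buyers, units=2, ceiling_price=20.0))
```

With these made-up numbers, price rationing gives the units to the two highest valuations (A and B), while waiting gives them to C and B, the buyers whose time is cheapest relative to their gain, and 60 dollars' worth of time is spent standing in line.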

Advanced cryptography over the last twenty-five years has produced a large number of important protocols. Public key encryption got all the hype, but it was just a warm-up. Alas, the perceived esoteric nature of the field and the seemingly magical function of its protocols have apparently intimidated regular programmers away from implementing most of them. Appearances to the contrary, they are based on solid math, not magic. I've blogged on some of these, but there is far more out there waiting to be picked up by mainstream computer security. Of all the advanced cryptographic protocols, secure time-stamping and multiparty secure computation are probably the most important.
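Linked time-stamping, in the spirit of Haber and Stornetta's schemes, is a good example of how modest the underlying math can be. The Python sketch below is a toy: each stamp hashes the document together with the previous stamp's link, so altering any earlier record breaks every later link. A real service would also publish periodic digests widely (and possibly sign stamps) so that it cannot quietly rebuild the whole chain; the class and function names are hypothetical.

```python
import hashlib
from dataclasses import dataclass

GENESIS = "0" * 64  # placeholder link before the first stamp


@dataclass(frozen=True)
class Stamp:
    index: int
    doc_hash: str
    prev_link: str
    link: str


def _h(*parts: str) -> str:
    return hashlib.sha256("|".join(parts).encode()).hexdigest()


class LinkedTimestamper:
    """Toy linked time-stamping service: each stamp commits to all earlier ones."""

    def __init__(self) -> None:
        self.stamps: list[Stamp] = []

    def stamp(self, document: bytes) -> Stamp:
        doc_hash = hashlib.sha256(document).hexdigest()
        prev = self.stamps[-1].link if self.stamps else GENESIS
        index = len(self.stamps)
        s = Stamp(index, doc_hash, prev, _h(str(index), doc_hash, prev))
        self.stamps.append(s)
        return s


def verify_chain(stamps: list[Stamp]) -> bool:
    """Recompute every link; any edited or reordered record breaks the chain."""
    prev = GENESIS
    for i, s in enumerate(stamps):
        if s.index != i or s.prev_link != prev or s.link != _h(str(i), s.doc_hash, prev):
            return False
        prev = s.link
    return True


service = LinkedTimestamper()
service.stamp(b"draft contract")
service.stamp(b"signed contract")
print(verify_chain(service.stamps))
```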

The ideas of voluntary oblivious compliance and admonition systems from the capability security community. These are very useful in elucidating interrelationships between wet protocols (e.g. manual procedures and law) and security. (By contrast I think capability security itself, and indeed the entire area of access control, is over-hyped: there are important roles for such things, for example in internal corporate access control, secure operating systems, and services where trust by all parties in corporate systems administrators is satisfactory, but they are nowhere close to being the be-all and end-all of distributed computing that is often implied. Rather, it's advanced cryptography where the most important advances in distributed security lie.)

The whole topic of franchise jurisdiction, crucial to understanding medieval and Renaissance English law, has nevertheless long been almost completely neglected. It is also a very different paradigm of the power of courts that has been forgotten by the modern legal and political communities in favor of the Roman model of governmental sovereignty.

Almost any topic that requires knowledge from more than one academic specialty has been overly neglected: so much so that most such topics don't even exist but should.

The specific ideas mentioned are just the tip of the iceberg of underappreciated topics. Please let me know what ideas you think have been the most underappreciated. There are, I'm sure, many, many ideas out there that I should know about but don't.

Friday, November 10, 2006

Towards smarter democracy

Bryan Caplan has a great article on the irrationality of voters. (No, it's not a commentary on the most recent American election, which was probably a smidgen more rational than most, on balance.) The basic idea, which I find unimpeachable except in one area, is that voters are almost never foreseeably affected by their own votes. Single votes almost never change the outcome of elections. Even if they did, they would rarely affect the voter or others in a way the voter clearly foresees. Given this disconnect between vote and consequence, votes are instead based on how good they make the voter feel. People decide whether to vote in the first place, and then how to vote, based almost entirely on their emotional needs rather than on a rational analysis of the kind that, for example, an engineer brings to designing software, an economist brings to crafting monetary policy, a lawyer brings to drafting a contract, a judge brings to interpreting law, or even that a boss or HR person brings to hiring a new employee.
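The claim that single votes almost never change outcomes can be made concrete with a binomial model. This Python sketch assumes two candidates and an even number of other voters who each vote independently for candidate A with probability p; your vote is decisive only if they split exactly evenly. Real elections are not independent coin flips, so treat the numbers as illustrative only.

```python
import math


def prob_decisive(other_voters: int, p: float) -> float:
    """Chance the other voters split exactly evenly, so one more vote decides.

    Toy model: two candidates, `other_voters` (even) independent voters,
    each choosing candidate A with probability p. Computed in log space
    (via lgamma) so that huge electorates do not overflow.
    """
    if other_voters % 2:
        raise ValueError("use an even number of other voters so a tie is possible")
    k = other_voters // 2
    log_prob = (math.lgamma(other_voters + 1) - 2 * math.lgamma(k + 1)
                + k * math.log(p) + k * math.log(1 - p))
    return math.exp(log_prob)


for n in (10, 10_000, 100_000_000):
    for p in (0.5, 0.51):
        print(f"n={n:>11,} p={p}: {prob_decisive(n, p):.3g}")
```

Even at a dead-even p = 0.5 the chance falls off roughly as the square root of 2/(πn); nudge p to 0.51 with a hundred million voters and it underflows to zero, while for a group of ten it stays near one in four.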

I'd add that people use their political opinions to signal things they'd like their acquaintances to believe about their personality. For example, people support aid to the poor to signal to their friends and co-workers that they are generous people, and thus good people to be friends or co-workers with. The actual impact of any resulting policy on poor people or taxpayers or others generally remains unobserved by friends and co-workers and thus is irrelevant. Even if a friend believes the policy is mistaken, it is enough for successful signaling that the friend believes the opiner's heart is in the right place. It's coming across as having a genuine desire to help the poor that counts. Similarly, people are motivated to vote in order to signal their altruistic participation in the community.

The one area Caplan fails to explore is very small-scale elections. I've long thought that juries, for example, are a much better form of democracy than large-scale elections. Jurors are forced to take the time to learn the facts (thus overcoming rational ignorance) and have a real case at hand (thus overcoming much of the mismatch between social signaling to ignorant friends and reality). They can clearly foresee the impact their verdict will have on the defendant. While Caplan extols the virtues of judges and other experts like himself (he'd like to have a Supreme Court of Economists to overturn bad economic legislation), such a Platonic oligarchy of experts needs to be checked and balanced by democracy. Juries, and the right of juries to judge law as well as fact (and for lawyers to argue law as well as fact in front of the jury), along with an unimpeachable right to trial by jury, are a much better way than large-scale elections to check oligarchy.

Wednesday, November 08, 2006

A raw idea to avoid being cooked

I come up with far more ideas than I have time to properly evaluate, much less pursue. Long ago I worked at the Jet Propulsion Laboratory and I still occasionally daydream about space stuff. Recently there have been several proposals for shading the earth from the sun, put forth to combat global warming. I've been noodling on my own sunshade idea for several years. For your entertainment (and who knows, it might also turn out to be quite useful) here it is:

Put a bunch of Venetian blinds at a spot permanently between the earth and the sun (known to orbital engineers as the first earth-sun Lagrange point, a.k.a. "L1"). These blinds would be refractive rather than reflective, to minimize the stationkeeping needed to keep them from being "blown" away by the pressure of sunlight. At L1 they need to refract the light by less than one degree for it to miss the earth. Less than 2% of sunlight needs to be deflected away from earth to offset the expected global warming from "greenhouse gases," primarily carbon dioxide.
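The "less than one degree" figure can be checked with two standard approximations, sketched in Python below: the L1 distance from the Hill-sphere formula r ≈ a(m/3M)^(1/3), and the angle Earth's radius subtends from that distance, which is the bend needed for a ray aimed at Earth's centre to clear the limb. The constants are rounded values, and the sketch ignores the Sun's finite disk and the fact that spacecraft near L1 actually fly orbits around the point.

```python
import math

AU_KM = 1.496e8           # mean Earth-Sun distance, km
EARTH_RADIUS_KM = 6371.0  # mean Earth radius, km
MASS_RATIO = 3.0e-6       # (Earth + Moon) / Sun mass, rounded


def l1_distance_km(a_km: float = AU_KM, mass_ratio: float = MASS_RATIO) -> float:
    """Hill-sphere approximation for the Earth-L1 distance: r ~ a * (m / 3M)^(1/3)."""
    return a_km * (mass_ratio / 3.0) ** (1.0 / 3.0)


def min_deflection_deg(distance_km: float, target_radius_km: float = EARTH_RADIUS_KM) -> float:
    """Bend needed at `distance_km` for a ray aimed at Earth's centre to just miss the disk."""
    return math.degrees(math.atan(target_radius_km / distance_km))


d = l1_distance_km()
print(f"L1 is about {d:,.0f} km from Earth; deflection needed ~ {min_deflection_deg(d):.2f} degrees")
```

This gives roughly 1.5 million km and about a quarter of a degree, comfortably under one degree.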

Venetian blinds, unlike this proposal and this one, can be opened or closed to allow continued control over the amount of sunlight hitting earth. This is essential, since we can't predict with great accuracy the degree of global warming that we will need to combat. The blinds I propose, rather than being a number of joined slats as in a normal Venetian blind, are simply a large number of separate satellites, each with a refractive slat that can be maneuvered to any angle with the sun (i.e. from fully "closed" vertical to fully "open" horizontal).
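A minimal Python sketch of the control problem: given a desired deflected fraction of Earth-bound sunlight, what tilt should every slat take? It assumes flat slats whose intercepted area scales with the sine of their tilt from the sun line (0 degrees edge-on and "open", 90 degrees face-on and "closed"), identical tilts across the fleet, and no mutual shadowing. `max_fraction`, the share deflected with every slat closed, is a hypothetical parameter.

```python
import math


def slat_angle_deg(target_fraction: float, max_fraction: float) -> float:
    """Tilt from the sun line that deflects `target_fraction` of Earth-bound sunlight.

    Toy geometry: a flat slat of area A tilted by angle a from the sun line
    presents A * sin(a) to the incoming light, and slats never shadow
    each other, so the fleet deflects max_fraction * sin(a).
    """
    if not 0.0 <= target_fraction <= max_fraction:
        raise ValueError("target outside the range the fleet can deliver")
    return math.degrees(math.asin(target_fraction / max_fraction))


# Hypothetical fleet sized to deflect 2% when fully closed.
for target in (0.0, 0.005, 0.01, 0.02):
    print(f"deflect {target:.1%}: tilt {slat_angle_deg(target, 0.02):.1f} degrees")
```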

I propose that the blinds be placed precisely in the region where they will deflect primarily light headed for equatorial regions on earth. That will even out temperatures a bit between northern and equatorial latitudes and reduce the energy (caused by temperature differences) available for storm formation, and thus probably the intensity of storms (although the effect on storms, like that of global warming itself, may be negligible). But the blinds should be maneuverable enough, by using solar electric propulsion or "sailing" on sunlight pressure, to redeploy to deflect sunlight headed to extreme latitudes if polar ice sheet melting becomes a larger problem than equatorial heat.

Instead of launching these vast (or vast number of) panels from the very deep gravity well of earth or manufacturing them on the moon (which probably lacks the proper organic ingredients and even sufficient water, a crucial industrial input), we can extract the raw materials from any near-earth asteroids containing water and methane ice or, if there are none such, from Jupiter-family comets. Besides the proper raw materials being available in sufficient volume in such ice, but probably not on the moon, there are a number of other advantages to microgravity ice mining explained in that linked article. If extracting material from comets and manufacturing the blinds can be automated, the entire vast project can be conducted by launching just a few dozen of today's rockets using the recently-proven solar electric upper stages; otherwise it will require manned missions similar to those recently proposed to Mars, but again using solar-electric upper stages. (Solar electric rockets are only required for the first trip; much lower cost solar-thermal ice rockets can be used in subsequent trips, again as explained in that linked article.)
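Why high-exhaust-velocity propulsion and avoiding deep gravity wells matter shows up in the Tsiolkovsky rocket equation. The Python sketch below computes the propellant share of initial mass for a given delta-v and specific impulse; the 5 km/s delta-v and the specific impulses used (about 450 s for a hydrogen-oxygen chemical stage, 3,000 s for an ion thruster) are assumed round numbers for illustration, not mission figures.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2


def propellant_fraction(delta_v_m_s: float, isp_s: float) -> float:
    """Tsiolkovsky rocket equation: share of initial mass that must be propellant.

    mass_ratio = m_initial / m_final = exp(delta_v / (Isp * g0))
    """
    mass_ratio = math.exp(delta_v_m_s / (isp_s * G0))
    return 1.0 - 1.0 / mass_ratio


# Assumed illustrative values, not figures from any specific mission.
for label, isp in (("chemical", 450.0), ("solar electric", 3000.0)):
    print(f"{label:14s} Isp={isp:>6.0f} s: {propellant_fraction(5000.0, isp):.0%} propellant")
```

With these assumptions the chemical stage is about two-thirds propellant and the ion stage about one-sixth, and the gap widens quickly as delta-v grows.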

Of course shades don't combat other effects of excess carbon dioxide such as ocean acidification and faster and differential plant growth, but these effects alone do not justify the vast costs of reducing carbon dioxide emissions. Indeed faster plant growth is probably a major economic benefit.

My off-the-cuff estimate of cost, which is probably as good as any recent NASA estimate for any of their gigaprojects, is that the project would cost $10 billion per year over 50 years. That's for the manned version; if it could be automated it would cost far less. This is far cheaper than other space-based sunshade proposals because once initial capital costs are made and the first two or three bootstrapping cycles have been undertaken, the ongoing transport costs of material from comet to the earth-sun L1 point are extremely small.
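A quick Python check on the post's cost figures: $10 billion per year for 50 years, against the roughly $500 billion per year emissions-reduction estimate quoted in the funding discussion. The discount rates are assumptions, and so is treating emissions reduction as a constant 50-year cost stream. Because both streams then run over the same horizon, discounting changes the totals but leaves the 1:50 ratio untouched; a fuller comparison would have to model the shade's front-loaded capital costs, which this sketch ignores.

```python
def present_value(annual_cost: float, years: int, discount_rate: float) -> float:
    """Present value of a constant annual cost paid at the end of each year."""
    if discount_rate == 0.0:
        return annual_cost * years
    return annual_cost * (1.0 - (1.0 + discount_rate) ** -years) / discount_rate


SHADE_PER_YEAR = 10.0        # $ billions per year, the post's estimate (manned version)
ABATEMENT_PER_YEAR = 500.0   # $ billions per year, the post's ~1%-of-world-GDP figure
YEARS = 50

for rate in (0.0, 0.03, 0.07):  # assumed discount rates, for illustration only
    shade = present_value(SHADE_PER_YEAR, YEARS, rate)
    abate = present_value(ABATEMENT_PER_YEAR, YEARS, rate)
    print(f"rate={rate:.0%}: shade={shade:,.0f}B  abatement={abate:,.0f}B  ratio={shade / abate:.3f}")
```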

As a side benefit it would (unlike a mission to the moon or Mars or a "base" or any similar project) develop infrastructure needed for large-scale space industry and colonization. I propose funding the project, not with a tax, but by treating the blinds like a carbon dioxide sink and auctioning extra carbon dioxide credits for a global carbon dioxide market, since the shade allows us to be far less drastic in reducing growth of carbon dioxide emissions. If instead of a market we go with carbon taxes the project could be funded from those. $10 billion per year is much lower than even the most optimistic estimates (at 1% of world GDP about $500 billion per year) of the costs of reducing carbon dioxide emissions. Furthermore, there are a variety of other markets for large-scale industry that can be exploited once the basic ice mining infrastructure is developed: space tourism and making manned missions and other transportation around the solar system vastly less expensive, for starters, as well as providing propellant, tankage, and industrial raw materials in earth orbits. As I stated in 1994:

Put a bunch of Venetian blinds at a spot permanently between the earth and the sun (know to orbital engineers as the first earth-sun Lagrange point, a.k.a. "L1"). These blinds would be refractive rather than reflective to minimize the stationkeeping needed to prevent being "blown" away by the pressure of sunlight. At L1 they only need to refract the light by less than one degree of angle for the light to miss the earth. Less than 2% of sunlight needs to be deflected away from earth to offset the expected global warming from "greenhouse gases," primarily carbon dioxide.

Venetian blinds, unlike this proposal, and this one, can be opened or closed to allow continued control over the amount of sunlight hitting earth. This is essential since we can't really predict with great accuracy the degrees of global warming that we will need to combat. The blinds I propose, rather than being a number of joined slats as in a normal Venetian blind, are simply a large number of separate satellites each with a refractive slat that can be manuevered to any angle with the sun (i.e. from fully "closed" vertical to fully "open" horizontal).

I propose that the blinds be placed precisely at the region where they will deflect primarily light headed for equatorial regions on earth. That will even out temperatures a bit between northern and equatorial latitudes and reduce the energy (caused by temperature differences) available for storm formation, and thus probably the intensity of storms (although the effect, like the effect of global warming itself, on storms may be negligible). But the blinds should be maneuverable enough, by using solar electric propulsion or "sailing" on sunlight pressure, to redeploy to deflect sunlight headed to extreme latitudes if polar ice sheet melting becomes a larger problem than equatorial heat.

Instead of launching these vast (or vast number of) panels from the very deep gravity well of earth, or manufacturing them on the moon (which probably lacks the proper organic ingredients and even sufficient water, a crucial industrial input), we can extract the raw materials from any near-earth asteroids containing water and methane ice or, if there are none such, from Jupiter-family comets. Besides offering the proper raw materials in sufficient volume (which the moon probably does not), such ice has a number of other advantages for microgravity mining, explained in that linked article. If extracting material from comets and manufacturing the blinds can be automated, the entire vast project can be conducted by launching just a few dozen of today's rockets using the recently proven solar electric upper stages; otherwise it will require manned missions similar to those recently proposed for Mars, again using solar electric upper stages. (Solar electric rockets are only required for the first trip; much lower-cost solar-thermal ice rockets can be used on subsequent trips, as also explained in that linked article.)

Of course shades don't combat other effects of excess carbon dioxide such as ocean acidification and faster and differential plant growth, but these effects alone do not justify the vast costs of reducing carbon dioxide emissions. Indeed faster plant growth is probably a major economic benefit.

My off-the-cuff estimate of cost, which is probably as good as any recent NASA estimate for any of their gigaprojects, is that the project would cost $10 billion per year over 50 years. That's for the manned version; if it could be automated it would cost far less. This is far cheaper than other space-based sunshade proposals because, once the initial capital costs are incurred and the first two or three bootstrapping cycles have been completed, the ongoing cost of transporting material from comet to the earth-sun L1 point is extremely small.
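Using the post's own numbers, the arithmetic behind the cost comparison in the next paragraph is easy to check. The only added assumption is a world GDP of about $50 trillion, which is what the "1% of world GDP, about $500 billion per year" figure implies.

```python
# Figures from the post: $10 billion per year for 50 years (manned version),
# versus mitigation costs of about 1% of world GDP per year.
SHADE_COST_PER_YEAR = 10_000_000_000
SHADE_YEARS = 50
# Assumption implied by "1% of world GDP about $500 billion": world GDP of ~$50 trillion.
WORLD_GDP = 50_000_000_000_000

shade_total = SHADE_COST_PER_YEAR * SHADE_YEARS      # whole 50-year program
mitigation_per_year = WORLD_GDP // 100               # 1% of world GDP
ratio = SHADE_COST_PER_YEAR / mitigation_per_year    # annual shade vs annual mitigation

print(f"shade program, all 50 years: ${shade_total:,}")
print(f"mitigation, a single year:   ${mitigation_per_year:,}")
print(f"annual shade spending is {ratio:.0%} of annual mitigation spending")
```

On these figures the entire 50-year shade program costs about as much as a single year of emissions reduction at the optimistic estimate.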

As a side benefit it would (unlike a mission to the moon or Mars or a "base" or any similar project) develop infrastructure needed for large-scale space industry and colonization. I propose funding the project, not with a tax, but by treating the blinds like a carbon dioxide sink and auctioning extra carbon dioxide credits in a global carbon dioxide market, since the shade allows us to be far less drastic in reducing the growth of carbon dioxide emissions. If instead of a market we go with carbon taxes, the project could be funded from those. $10 billion per year is much lower than even the most optimistic estimates of the cost of reducing carbon dioxide emissions (about $500 billion per year, at 1% of world GDP). Furthermore, there are a variety of other markets for large-scale industry that can be exploited once the basic ice mining infrastructure is developed: for starters, space tourism and making manned missions and other transportation around the solar system vastly less expensive, as well as providing propellant, tankage, and industrial raw materials in earth orbits. As I stated in 1994:

If the output of the icemaking equipment is high, even 10% of the original mass [returned to earth orbit by rockets using water propellant extracted from the comet ice] can be orders of magnitude cheaper than launching stuff from Earth. This allows bootstrapping: the cheap ice can be used to propel more equipment out to the comets, which can return more ice to Earth orbit, etc. Today the cost of propellant in Clarke [geosynchronous] orbit, the most important commercial orbit, is fifty thousand dollars per kilogram. The first native ice mission might reduce this to a hundred dollars, and to a few cents after two or three bootstrapping cycles.

The savings for transporting materials to the earth-sun L1 point, instead of launching them from earth, are even larger.
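The 1994 passage gives only endpoints: $50,000/kg today, $100/kg after the first native ice mission, and "a few cents" after two or three bootstrapping cycles. The sketch below assumes, purely for illustration, that each cycle cuts cost by the same factor, and takes "a few cents" as 5 cents; it then asks how large that per-cycle factor must be.

```python
# Figures from the 1994 passage: propellant in Clarke (geosynchronous) orbit costs
# $50,000/kg today, perhaps $100/kg after the first native ice mission, and
# "a few cents" after two or three bootstrapping cycles.
COST_TODAY = 50_000.0
COST_FIRST_MISSION = 100.0
COST_TARGET = 0.05  # assumption: "a few cents" taken as 5 cents per kilogram


def per_cycle_factor(start: float, end: float, cycles: int) -> float:
    """Factor each cycle must divide cost by, if every cycle cuts it by the same factor."""
    return (start / end) ** (1.0 / cycles)


def cost_after(start: float, factor: float, cycles: int) -> float:
    """Cost per kilogram after `cycles` cycles, each dividing cost by `factor`."""
    return start / factor ** cycles


print(f"first mission: {COST_TODAY / COST_FIRST_MISSION:.0f}x cheaper")
for cycles in (2, 3):
    k = per_cycle_factor(COST_FIRST_MISSION, COST_TARGET, cycles)
    print(f"{cycles} cycles: each must cut cost about {k:.0f}x "
          f"(ends at ${cost_after(COST_FIRST_MISSION, k, cycles):.2f}/kg)")
```

Under this equal-factor assumption, three cycles need roughly a 13-fold saving each and two cycles roughly 45-fold, both far smaller than the 500-fold drop claimed for the first mission.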

Wednesday, November 01, 2006

Robot law

To what extent can law be mechanized? This author, Mark Miller, Lawrence Lessig, and others have proposed that software code can provide a substitute, to a greater or lesser degree, for various kinds of legal rules, especially in new software-driven areas such as the Internet. Indeed software increasingly provides a de facto set of law-like rules governing human interaction even when we don't intend such a result.

This post proposes a framework for mechanical laws in physical spaces inspired by the traditional English common law of trespass (roughly what today is called in common law countries the "intentional torts," especially trespass and battery).

Several decades ago, Isaac Asimov posited three laws to be programmed into robots:

1. A robot may not injure a human being, or, through inaction, allow a human being to come to harm.

2. A robot must obey orders given it by human beings, except where such orders would conflict with the First Law, and

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

I reject these as an approach for analyzing the mechanization of law because (i) they represent a law of robot servants towards human masters, which is quite different from my present goal of analyzing the mechanization of laws among equals (whether equal as fellow property or equal as fellow property owners), and (ii) they are hopelessly subjective and computationally intractable. How does a robot determine when a human has been "harmed"? Even if that problem were solved, how does a robot predict when a human will come to harm, much less whether its own actions will cause such harm, much less which actions will avoid that harm? While Asimov addressed some of these issues in various oversimplified scenarios in his stories, this is no substitute for the real-world experience of actual disputes and settlements, and the resulting precedents and evolution of rules.

Thus I propose to substitute for Asimov's Laws a framework which (1) is based on a real-world, highly evolved system of law that stressed concrete and objective rules rather than abstract, ambiguous, and subjective ethical precepts, (2) is "peer-to-peer" rather than "master-servant", and (3) is based, as much as possible, not on hypothetical human-like "artificial intelligence," but on a framework much of which can be tested with hardware and simple algorithms resembling those we possess today. Indeed, a prototype of this system can probably be developed using Lego(tm) robot kits, lasers, photosensors, sonar, video cameras, and other off-the-shelf hardware along with some custom computer programming using known algorithms.

Using such a prototype framework I hope that we can test out new legal rules and political systems in contests without harming actual humans. I also hope that we can test the extent to which law can be mechanized and to which security technology can prevent or provide strong evidence for breaches of law.

What about possible application to real-world security systems and human laws? In technical terms, I don't expect most of these mechanical laws to be applied in a robotic per se fashion to humans, but rather mostly to provide better notice and gather better evidence, perhaps in the clearest cases creating a prima facie case shifting the burden of lawsuit and/or the burden of proof to the person against whom clear evidence of law-breaking has been gathered. The system may also be used to prototype "smart treaties" governing weapons systems.

The main anthropomorphic assumption we will make is the idea that our robots can be motivated by incentives, e.g. by the threat of loss of property or of personal damage or destruction. If this seems unrealistic, think of our robots as being agents of humans who after initially creating the robots are off-stage. Under contest rules the humans have an incentive to program their robots to avoid damage to themselves as well as to avoid monetary penalties against their human owners; thus we can effectively treat the robots as being guided (within the limits of flexibility and forethought of simple algorithms) by such incentives.
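One simple way to make "motivated by incentives" concrete for a contest robot is an expected-value rule: price each candidate action by its gain, minus the chance of being penalized times the penalty, minus expected damage to the robot itself, and pick the best. This is an assumption about how contestants might program their robots, not a rule from the post; the field names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Option:
    """A candidate action, with hypothetical payoffs in arbitrary contest units."""
    name: str
    gain: float               # value to the robot's owner if the action succeeds
    p_caught: float           # estimated probability of detection and penalty
    penalty: float            # monetary penalty charged to the human owner if caught
    damage_risk: float = 0.0  # expected cost of damage to the robot itself


def expected_payoff(opt: Option) -> float:
    """Expected net value of an action once penalties and self-damage are priced in."""
    return opt.gain - opt.p_caught * opt.penalty - opt.damage_risk


def choose(options: list[Option]) -> Option:
    """Pick the option with the highest expected payoff."""
    return max(options, key=expected_payoff)


options = [
    Option("go around the fenced region", gain=5.0, p_caught=0.0, penalty=0.0),
    Option("cut across the fenced region", gain=8.0, p_caught=0.9, penalty=20.0, damage_risk=1.0),
]
print(choose(options).name)  # go around the fenced region
```

The shortcut is worth more on its face (8 versus 5), but once a likely penalty and some risk of damage are priced in its expected value is negative, so the robot goes around.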

The traditional trespasses are based on surfaces: a trespass on real property breaks a spatial boundary of that property, and a battery touches the skin or clothes or something closely attached to them (such as a tool grasped in the hand). Corresponding to such laws we have developed a variety of security devices such as doors, locks, and fences that provide notice of a surface and sometimes provide evidence that such a surface has been forcibly crossed. The paradigmatic idea is "tres-pass," Law French for "passing beyond": a crossing of a boundary that, given the notice and affordances provided, the trespasser knew or should have known was a violation of law. In our model, robots should have been programmed not to cross such boundaries without owner consent or other legal authority, ideas explored further below.
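The programming side of this, a robot that declines to cross a boundary without consent, can be sketched minimally. The model is an assumption, not the post's: real property is a hypothetical axis-aligned box owned by one robot, consent is a set of (owner, visitor) pairs, and the robot checks each planned step before taking it.

```python
from dataclasses import dataclass

Point = tuple[float, float, float]


@dataclass(frozen=True)
class Region:
    """Hypothetical model of real property: an axis-aligned box owned by one robot."""
    owner: str
    lo: Point
    hi: Point

    def contains(self, p: Point) -> bool:
        return all(a <= c <= b for a, c, b in zip(self.lo, p, self.hi))


def step_allowed(robot: str, here: Point, there: Point,
                 regions: list[Region], consents: set[tuple[str, str]]) -> bool:
    """Return False if the step would enter another robot's region without consent.

    consents holds (owner, visitor) pairs. Only the endpoint is checked, so steps
    must be small relative to the thinnest region; a real planner would test the
    whole segment.
    """
    for r in regions:
        entering = r.contains(there) and not r.contains(here)
        if entering and r.owner != robot and (r.owner, robot) not in consents:
            return False
    return True


yard = Region("R1", (0.0, 0.0, 0.0), (10.0, 10.0, 3.0))
print(step_allowed("R2", (-1.0, 5.0, 0.0), (1.0, 5.0, 0.0), [yard], set()))           # False
print(step_allowed("R2", (-1.0, 5.0, 0.0), (1.0, 5.0, 0.0), [yard], {("R1", "R2")}))  # True
```

Checking at the moment of entry mirrors the "big step" idea: the robot's duty bites at the surface, not at every movement inside it.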

One way to set up this system is as contests between human programmers deploying their robots into the robot legal system. In a partially automated system there would be some human-refereed "police" and "courts," but an ideal fully-automated version would run without human intervention. Under contest rules in the fully-automated mode, after the human creators, who had an incentive to program the robots to respond to incentives, are done with their programming and the contest has commenced, only robots and other things exist in this robotic legal system. Only robots are legal persons; as such they can own other things and devices as well as spatial regions and things fixed therein (real property).

For each type of tort in my robot legal system, I propose a class of surface or other physical condition and corresponding class(es) of sensors:

(1) battery : physical surface of the robot (sensor detects touch). The touching must be the result of a physical trajectory that was probably not accidental (e.g. bumping a fellow robot within a crowded space is probably not a battery).

(2) trespass to real property : three-dimensional surface of laser-break sensors bounding a space owned by a robot, or other location-finding sensors that can detect boundary crossings.

(3) trespass or conversion of moveable property : involves touch sensors for trespass to chattels; security against conversion probably also involves using RFID, GPS, or, more generally, proplets in the moveable property. But this is a harder problem, so call it TBD.

(4) assault, a credible threat of imminent battery : probably involves active and passive viewing devices such as radar, sonar, and video, with corresponding movement detection and pattern recognition. Specifics TBD (another harder problem).
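For tort (1), the "probably not accidental" test can be approximated from trajectory evidence alone. The sketch below is one hypothetical way to do it: a contact counts as a probable battery only if it was both forceful (closing speed above a threshold, with a higher threshold in crowds to reflect the tolerated bump) and deliberate (the toucher held a closing course for a while before contact). The fields and thresholds are invented for illustration; torts (3) and (4) are left TBD, as in the post.

```python
from dataclasses import dataclass


@dataclass
class Touch:
    """One contact reported by a robot's touch-sensitive surface (hypothetical fields)."""
    toucher: str
    closing_speed: float  # m/s, the toucher's speed toward the victim at contact
    approach_time: float  # s, how long the toucher had held a closing course
    crowded: bool         # True if many robots were within a short radius


# Hypothetical contest parameters, not from the post.
BUMP_SPEED = 0.2        # below this, a contact in open space reads as incidental
CROWD_BUMP_SPEED = 0.5  # crowds tolerate firmer bumps (implied consent)
AIMED_TIME = 1.0        # a closing course held this long suggests a chosen trajectory


def is_battery(t: Touch) -> bool:
    """Flag a touch as a probable battery from trajectory evidence alone."""
    speed_limit = CROWD_BUMP_SPEED if t.crowded else BUMP_SPEED
    forceful = t.closing_speed >= speed_limit
    deliberate = t.approach_time >= AIMED_TIME
    return forceful and deliberate


print(is_battery(Touch("R2", 0.3, 2.0, crowded=True)))   # False: firm bump in a crowd
print(is_battery(Touch("R2", 0.3, 2.0, crowded=False)))  # True: aimed contact in open space
```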

Note that we have eliminated subjective elements such as "intent" and "harm." The idea is to replace these with deductions from physical evidence that can be gathered by sensors. For example, evidence of a boundary crossing with force often in itself (per se) implies probable intent, and in such cases intent can be dispensed with as a conjunctive element or automatically inferred. With well-designed physical triggering conditions, and notices and affordances to avoid them, we can avoid subjective elements that would require human judgment and still reach the right verdict in the vast majority of cases. In real-world law such notices, affordances, and evidence-gathering would allow us to properly assign the burden of lawsuit to the prima facie lawbreaker, so that a non-frivolous lawsuit would occur in only a minuscule fraction of cases. Thus security systems adapted to, and even simulating, real-world legal rules could greatly lower legal costs, but it is doubtful that they could be entirely automated without at least some residual injustice.
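The per se inference described above might look like this in a contest referee. It is a sketch under assumptions: the evidence fields, the two-sensor corroboration rule, and the five-second lingering threshold are all hypothetical. A forced crossing is treated as implying intent per se; an unforced crossing of a noticed boundary becomes a prima facie case only if the crosser stayed, which is when the burden would shift to it.

```python
from dataclasses import dataclass
from enum import Enum


class Finding(Enum):
    NO_CASE = "no case"
    PRIMA_FACIE = "prima facie violation: burden shifts to the crosser"


@dataclass
class Crossing:
    """Sensor evidence about one boundary crossing (hypothetical fields)."""
    notice_posted: bool    # boundary was marked, e.g. a visible laser fence or beacon
    forced: bool           # a lock, barrier, or fence was broken to get through
    beams_broken: int      # how many laser-break sensors recorded the crossing
    stayed_seconds: float  # time spent inside before leaving


MIN_BEAMS = 2         # hypothetical: require corroboration from at least two sensors
LINGER_SECONDS = 5.0  # hypothetical: staying this long after crossing a noticed boundary


def evaluate(c: Crossing) -> Finding:
    """Infer probable intent per se from physical evidence instead of asking about it."""
    if c.beams_broken < MIN_BEAMS:
        return Finding.NO_CASE      # evidence too thin to act on
    if c.forced:
        return Finding.PRIMA_FACIE  # forcing a barrier implies intent per se
    if c.notice_posted and c.stayed_seconds >= LINGER_SECONDS:
        return Finding.PRIMA_FACIE  # knew or should have known, and stayed anyway
    return Finding.NO_CASE


print(evaluate(Crossing(notice_posted=True, forced=False, beams_broken=3, stayed_seconds=30.0)).value)
```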

Our robotic contract law is simply software code that the robots have generated (or that their human programmers have previously coded) and that they agree to abide by, i.e. smart contracts.

Three basic legal defenses (or justifications) for a surface break, taking of moveable property, or assault are:

(1) consent by the owner, expressed or implied (the former requires only some standard protocol that describes the surface and conditions for activity within that surface, i.e. a smart contract; I'm not sure what the latter category, implied consent, is in robot-land, but the "bumping in a crowded space" is probably an example of implied consent to a touch).

(2) self-defense (which may under certain circumstances include defense of property if the victim was illegally within a surface at the time), or

(3) other legal authorization.
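The three defenses can be checked mechanically once consent is expressed as a smart contract. In this minimal sketch, assumed rather than taken from the post, a contract is a function both robots agreed to that says whether an incident was permitted; implied consent is limited to the crowded-space bump; and legal authorization is only a placeholder lookup, since who may authorize what is the open question the post defers.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Incident:
    """A prima facie surface break, taking, or assault (hypothetical fields)."""
    actor: str                           # robot that crossed the surface
    owner: str                           # robot whose surface was crossed
    time: float                          # contest clock, in hours
    crowded_bump: bool = False           # incidental touch in a crowded space
    provoked: bool = False               # owner was battering the actor or illegally inside its surface
    authorization: Optional[str] = None  # id of an enforcement authorization, if any


# A smart contract here is just code both parties agreed to in advance:
# given an incident, it says whether the owner consented to it.
Contract = Callable[[Incident], bool]


def find_defense(inc: Incident,
                 contracts: dict[tuple[str, str], Contract],
                 valid_authorizations: set[str]) -> Optional[str]:
    """Return the first defense that justifies the incident, or None if none applies."""
    contract = contracts.get((inc.owner, inc.actor))
    if contract is not None and contract(inc):
        return "express consent"
    if inc.crowded_bump:
        return "implied consent"
    if inc.provoked:
        return "self-defense"
    if inc.authorization is not None and inc.authorization in valid_authorizations:
        return "legal authorization"  # placeholder: who may issue these is left open
    return None


# Hypothetical contract: R1 lets R2 inside its surface during hours 9 to 17.
contracts = {("R1", "R2"): lambda inc: 9.0 <= inc.time <= 17.0}
print(find_defense(Incident("R2", "R1", time=10.0), contracts, set()))  # express consent
print(find_defense(Incident("R2", "R1", time=20.0), contracts, set()))  # None
```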

The defense (3) of legal authorization is the most interesting, and it relates strongly to how robots are incentivized (i.e. punished) and how they are compensated for damages. In other words, what are the "police" and "courts" that enforce the law in this robotic world? Such a punishment is itself an illegal trespass unless it is legally authorized. The defense of legal authorization is also strongly related to defense (2), self-defense. That will be the topic, I hope, of a forthcoming post.
